Material

Abstract

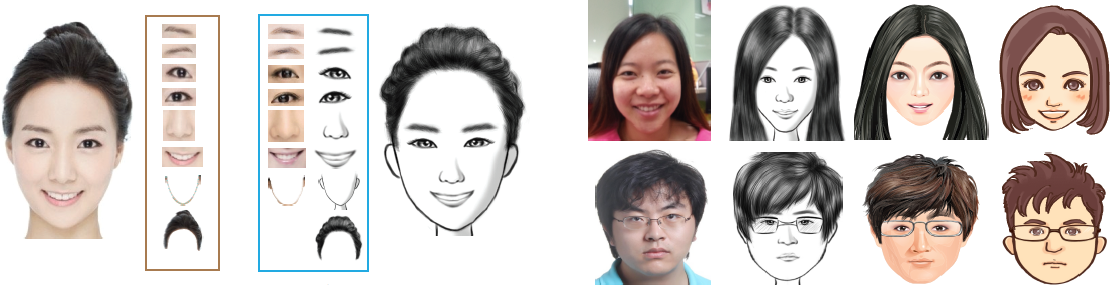

This paper presents a data-driven framework for generating cartoon-like facial representations from a given portrait image. We formulate the problem as an optimization that jointly considers the desired artistic style, the image-cartoon relationships of facial components, and automatic adjustment of the image composition. Stylization consists of two steps: a face parsing step that localizes and extracts facial components from the input image, and a cartoon generation step that cartoonizes the face according to the extracted information. The cartoon is assembled from a database of stylized facial components, and the similarity between the facial components of the input and those of the cartoon is quantified by image feature matching. To keep the results natural and attractive, we incorporate prior knowledge, extracted from a set of cartoon faces, about photo-cartoon relationships and the optimal composition of cartoon facial components.
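As a rough illustration of the component-matching idea, the following sketch picks, for each parsed facial component, the database entry whose feature vector is most similar to the photo's. It is not the paper's method: the abstract does not specify the features or the similarity measure, so cosine similarity, the function names `cosine_similarity` and `select_components`, and the toy feature vectors are all assumptions. The style and composition terms of the full optimization are omitted.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length feature vectors (assumed measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def select_components(photo_features, cartoon_database):
    """For each facial part, return the id of the most similar cartoon component.

    photo_features:   {part: feature vector} from a hypothetical face parser
    cartoon_database: {part: {component_id: feature vector}}
    """
    selection = {}
    for part, feature in photo_features.items():
        candidates = cartoon_database[part]
        selection[part] = max(
            candidates,
            key=lambda cid: cosine_similarity(feature, candidates[cid]),
        )
    return selection


# Toy data: made-up 3-dimensional features for two facial parts.
photo_features = {"eyes": [0.9, 0.1, 0.3], "mouth": [0.2, 0.8, 0.5]}
cartoon_database = {
    "eyes": {"eyes_round": [1.0, 0.0, 0.2], "eyes_narrow": [0.1, 0.9, 0.4]},
    "mouth": {"mouth_smile": [0.1, 0.9, 0.6], "mouth_flat": [0.9, 0.2, 0.1]},
}
selection = select_components(photo_features, cartoon_database)
```

Selecting each part independently like this ignores how the parts fit together; the framework described above additionally uses composition priors, which would couple these choices in a joint objective.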