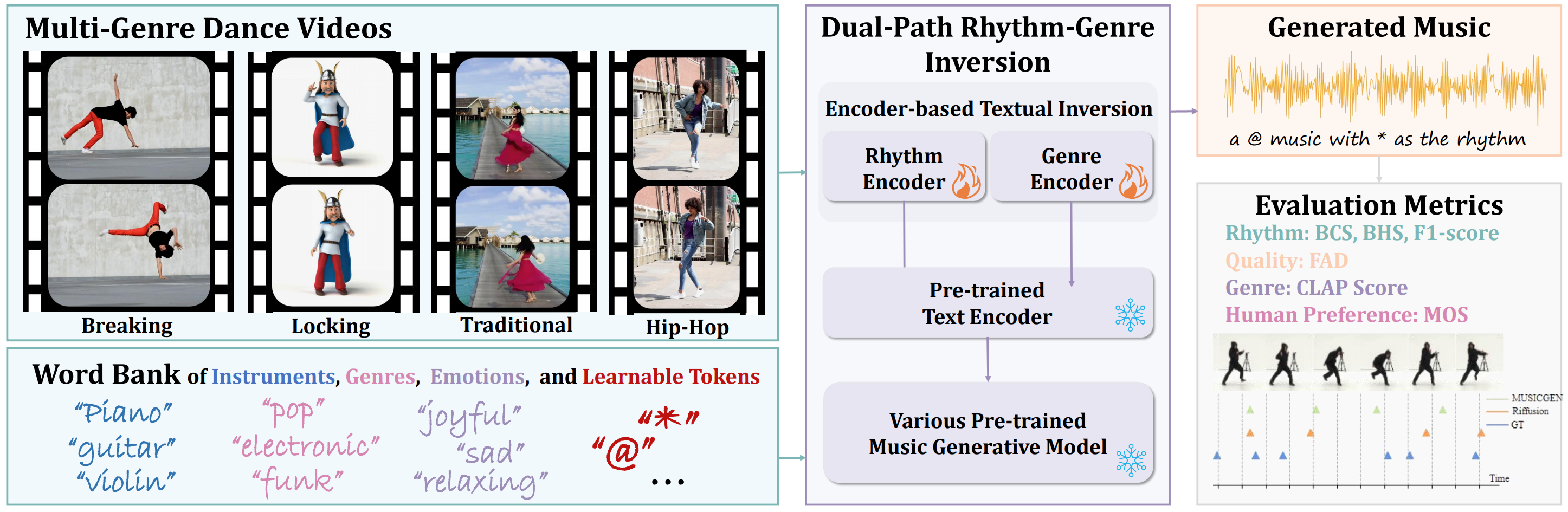

We propose dual-path rhythm-genre inversion, which encodes the rhythm and genre of a dance motion sequence into two learnable tokens that add visual control to pre-trained text-to-music models. Because it relies on encoder-based textual inversion, our method is a plug-and-play solution for text-to-music generation models, enabling seamless integration of visual cues. The word bank comprises learnable pseudo-words (represented as “@” for genre and “*” for rhythm) and descriptive music terms such as instruments, genres, and emotions. By combining these pseudo-words with descriptive music terms, our method generates a diverse range of music that is synchronized with the dance.
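
To make the word-bank idea concrete, here is a minimal sketch of how a prompt might be assembled from the two pseudo-words and a few descriptive terms. The function name `build_prompt` and the sentence template are assumptions made for illustration only; the caption does not specify the actual prompt format.

```python
def build_prompt(terms, genre_token="@", rhythm_token="*"):
    """Compose a text prompt from the two pseudo-words plus descriptive terms.

    The template below is hypothetical; only the "@" (genre) and "*" (rhythm)
    symbols come from the figure caption.
    """
    base = f"a {genre_token} music with {rhythm_token} as the rhythm"
    # Drop empty or whitespace-only terms before joining.
    cleaned = [t.strip() for t in terms if t.strip()]
    if not cleaned:
        return base
    return base + ", " + ", ".join(cleaned)


# Descriptive terms drawn from the word bank: an instrument and an emotion.
prompt = build_prompt(["piano", "happy"])
print(prompt)
```

Swapping the descriptive terms changes the requested style, while the two pseudo-words stay in place to carry the genre and rhythm extracted from the dance.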

Abstract

The seamless integration of music with dance movements is essential for communicating the artistic intent of a dance piece. This alignment also significantly improves the immersive quality of gaming experiences and animation productions. Although there has been remarkable progress in creating high-fidelity music from textual descriptions, current methods mainly focus on modulating overall characteristics such as genre and emotional tone. They often overlook fine-grained control of temporal rhythm, which is indispensable in crafting music for dance because it aligns the musical beats with the dancers’ movements. To address this gap, we propose an encoder-based textual inversion technique that augments text-to-music models with visual control, facilitating personalized music generation. Specifically, we develop dual-path rhythm-genre inversion to integrate the rhythm and genre of a dance motion sequence into the textual space of a text-to-music model. Unlike traditional textual inversion methods, which directly update text embeddings to reconstruct a single target object, our approach uses separate rhythm and genre encoders to obtain text embeddings for two pseudo-words, adapting to varying rhythms and genres. We collect a new dataset called In-the-wild Dance Videos (InDV) and demonstrate that our approach outperforms state-of-the-art methods across multiple evaluation metrics. Furthermore, our method adapts to changes in tempo and integrates effectively with the inherent text-guided generation capability of the pre-trained model. Our source code and demo videos are available at https://github.com/lsfhuihuiff/Dance-to-music_Siggraph_Asia_2024.
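
The contrast with traditional textual inversion can be sketched in a few lines. The toy example below assumes that each encoder is a single linear projection and that the dance features are short hand-written vectors; the names `make_linear`, `encode`, and `embed_prompt`, the feature values, and the dimensions are all hypothetical and are not taken from the released code. The point it illustrates is only that the embeddings for "@" and "*" are computed from the input dance by encoders, rather than stored as fixed vectors optimized for one target.

```python
import random

EMBED_DIM = 8  # toy size; real text encoders use far larger embeddings


def make_linear(in_dim, out_dim, seed):
    """Random in_dim x out_dim weights, a stand-in for a trained encoder."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(out_dim)] for _ in range(in_dim)]


def encode(features, weights):
    """Project a feature vector into the text-embedding space."""
    out_dim = len(weights[0])
    return [sum(f * row[j] for f, row in zip(features, weights))
            for j in range(out_dim)]


def embed_prompt(tokens, vocab_embeddings, pseudo_embeddings):
    """Look up each token, using encoder outputs for the pseudo-words."""
    return [pseudo_embeddings[t] if t in pseudo_embeddings else vocab_embeddings[t]
            for t in tokens]


# Frozen vocabulary embeddings of the pre-trained model (random here).
rng = random.Random(0)
vocab = {w: [rng.uniform(-1, 1) for _ in range(EMBED_DIM)]
         for w in ["a", "music", "with", "rhythm", "piano"]}

# Hypothetical features extracted from one dance clip.
rhythm_features = [0.0, 1.0, 0.0, 1.0]
genre_features = [0.2, 0.7, 0.1]

# Two separate paths: one encoder per pseudo-word.
pseudo = {
    "*": encode(rhythm_features, make_linear(4, EMBED_DIM, seed=1)),
    "@": encode(genre_features, make_linear(3, EMBED_DIM, seed=2)),
}

tokens = ["a", "@", "music", "with", "*", "rhythm", "piano"]
embedded = embed_prompt(tokens, vocab, pseudo)
print(len(embedded), len(embedded[0]))
```

Because the pseudo-word embeddings are recomputed for every clip, a new dance only requires a forward pass through the two encoders, while the ordinary words keep their frozen embeddings and the text-guided behavior of the base model is preserved.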